Social media can bring people together for good, but it can also connect supporters of terror, extremism and hate. Neil Johnson shows how physics can shed light on this darker side of our online world

Are you a member of a Facebook group? I belong to a couple, including one for jazz musicians interested in playing at local gigs. In fact, there are three billion active users of Facebook – that’s more than a third of the planet – and each of them is typically a member of more than one Facebook group. So the chances are you’re in a Facebook group too.

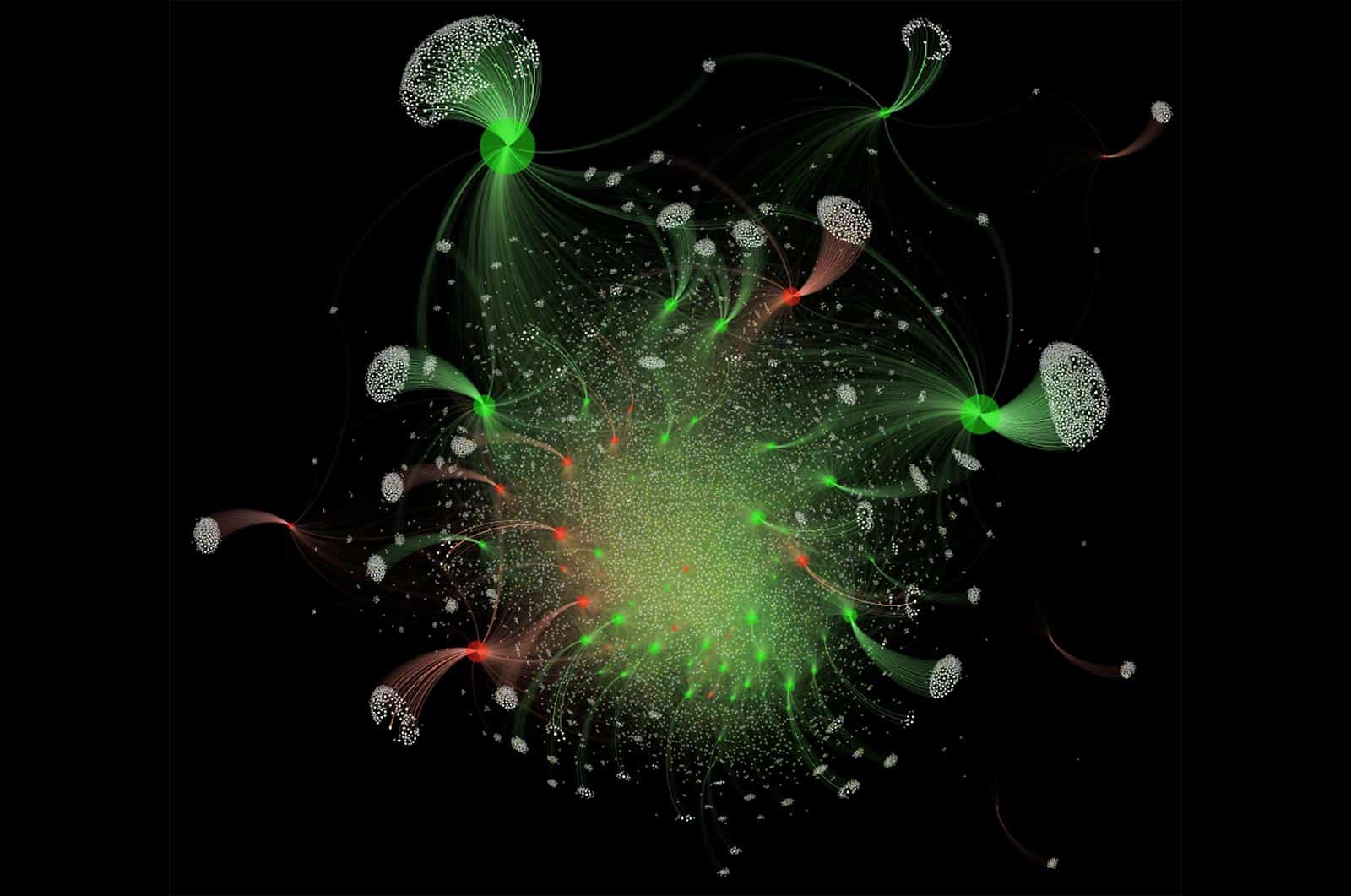

Facebook and its international competitors – such as VKontakte in Russia – purposely design their group features to bring people together into relatively tight-knit clusters so that they can focus on some shared interest or purpose (figure 1). Popular Facebook groups include one for fans of the actor Vin Diesel, another for those who love exotically flavoured crisps, and one for self-proclaimed SAHDs (stay-at-home-dads).

However, not all online groups (or their simpler cousins, “pages”) are as benign. That’s because social-media tools – just like any technology – can be used for bad as well as good. So while groups or pages can bring together people from across the planet who like crisps, they can also link those with a potential interest in far more dangerous activities such as terrorism, extremism and hate against a particular sector of society.

There are plenty of examples where online narratives have incited individuals to commit violent acts. On 8 March 2015, for example, there was a post on VKontakte in a group supporting jihadism and the so-called “Islamic State” (IS) that said, “[translation] IS are preparing to attack the city of Karbala [in Iraq], 500 tonnes of explosives are ready”. This was followed a few months later by the discovery of booby-trapped vehicles and IS members in a small town 80 km west of Karbala. Another example of an online group potentially influencing an individual to violence was the fatal stabbing of a black university student in Maryland, US, in May 2017, where the suspect – a white student at a neighbouring university – belonged to a Facebook group called “Alt-Reich: Nation”, which featured white-supremacist content.

The challenge

But could we turn these examples on their head and use such social-media activity to foresee horrible real-world events? That might seem unlikely, given that such attacks appear to come from out of the blue, carried out by “lone-wolf” individuals with no criminal record. And with billions of online users, detecting who will act sounds like looking for a needle in a haystack – especially as, prior to any attack, each “needle” may be effectively indistinguishable from any other straw of “hay”.

This was the problem that I and my colleagues, Pedro Manrique and Minzhang Zheng from the University of Miami, began grappling with back in 2011. That year we had joined a multidisciplinary team that included computer scientists from HRL Laboratories in Malibu and social scientists from Harvard, Boston and Northeastern universities, to take part in the Open Source Indicators (OSI) challenge run by the US Intelligence Advanced Research Projects Activity (IARPA).

IARPA’s research question sounded simple on the surface: if you have access (as we all do) to all the public information available on the Internet, can you provide reliable warnings about future societal activity such as civil unrest and violence? With various countries in Latin America acting as a test-bed, our task was to predict the date, location, cause and level of violence of such events. The challenge was run as a competition against other combined university–industry teams: all predictions were submitted electronically in real time and later scored by IARPA according to whether the event actually happened and how the details played out compared to the prediction.

We, like all the teams, initially assumed that the answer would lie in the Twitter activity of users. After all, the challenge took place just after the 2010 “Arab Spring” – a series of anti-government protests and armed rebellions across the Middle East – when it had been claimed that Twitter was being used to co-ordinate individuals for street protests. We did indeed find Tweets of this nature – but there were far too many compared to the actual events, meaning that the number of false alarms was huge. As a result, the scores of all teams remained modest.

Then things got worse. Along came the “Brazilian Spring” in 2013 – a huge spate of large-scale street protests that broke out unexpectedly around a range of social and political concerns. All the Twitter-based models, however, missed this completely. Indeed, the Twitter feeds had looked fairly typical prior to the onset. Where, if anywhere, was the online precursor signal ahead of the offline riots?

Physics patterns

The other teams, who were primarily engineers and computer scientists, immediately turned their attention to finding the individuals whose Twitter feeds had acted as the trigger for these unpredicted protests. In other words, they went in search of a guilty needle in the huge haystack of Tweets – assuming implicitly that there was one. We instead decided to take a step back and think of the underlying physics.

Physics tells us that large-scale changes in a physical system – like water “suddenly” boiling – cannot properly be understood in terms of what a single member molecule is doing. Instead, the answer lies in the collective, “many-body” behaviour – the correlations that develop during the build-up, between molecules from across the entire system. When a system approaches the phase change, these correlations begin to cluster, and the number and size of these correlation clusters escalate. The precursor signal therefore lies not in the needles themselves, but in how they cluster in time. On social media, by analogy, the precursor can be found in online groups – not with the individuals themselves. Each group, after all, is nothing but a cluster of correlated individuals (figure 1).

With this thinking in mind, we went back and studied Facebook groups in the build-up to the Brazilian Spring. And there was the precursor signal we had been looking for – an escalation in the number of Facebook groups (i.e. correlation clusters) debating and discussing disagreements with particular policies and issues. Moreover, instead of a single group growing in size and hence being responsible, we found that the signal lay in the pattern the groups were creating across the system. So just as water starts bubbling feverishly as it approaches its boiling point, the creation of Facebook groups begins to escalate.

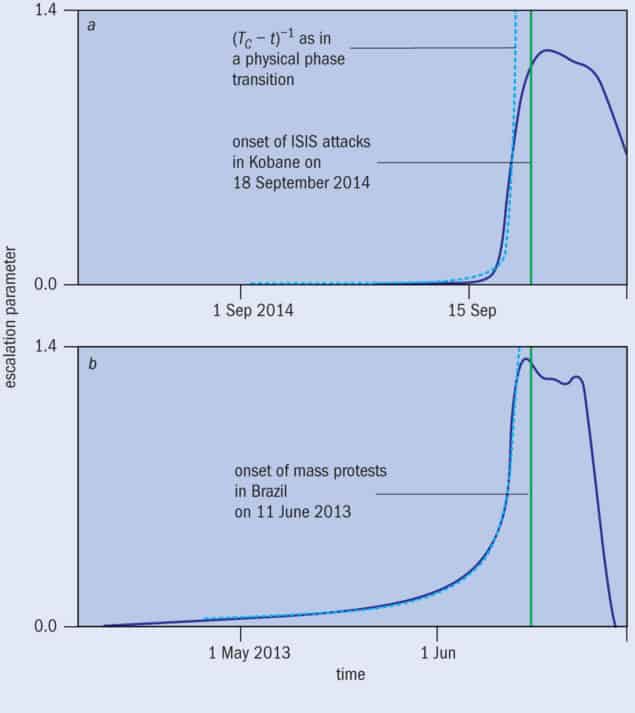

Our 2016 Science paper (352 1459) showed that the escalation rate of Facebook-group creation follows an inverse algebraic divergence (figure 2) as the onset approaches, in a way that is mathematically identical to a physical phase transition – but with the crucial new feature that this is an escalation in time as opposed to an escalation in an external control variable such as temperature. It therefore represents a new piece of physics: a dynamical phase transition in an out-of-equilibrium system.
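For readers who like to see the form written out, an inverse algebraic divergence in time can be sketched as below. The symbols are illustrative assumptions rather than the notation of the paper: $R(t)$ stands for the rate at which new groups are created, $T_c$ for the onset time of the real-world event, and $\beta$ for a positive exponent. The second line shows, for comparison, the familiar equilibrium case in which a response quantity $\chi$ diverges as the temperature $T$ approaches its critical value $T^{*}$ with exponent $\gamma$.

```latex
R(t) \;\propto\; \frac{1}{(T_c - t)^{\beta}}, \qquad t < T_c,\ \ \beta > 0
\\[6pt]
\chi(T) \;\propto\; \frac{1}{\lvert T - T^{*} \rvert^{\gamma}}, \qquad \gamma > 0
```

The two expressions have the same mathematical shape; what differs is that the first diverges as time runs towards the onset, whereas the second diverges as an external control variable is tuned.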

As well as revealing new physics, this experience taught us an invaluable lesson about social media that paved the way for our subsequent understanding of online support for terrorism, extremism and hate. Unlike online Facebook groups where in-depth discussions can develop organically over time, Twitter acts more like a platform for shout-outs. Just as you probably would not be convinced to change your opinion about a complex issue such as Brexit simply by what an individual shouts out on a busy high street, nor do groups of people gravitate toward collective opinions or actions on complex issues because of individual Tweets. Fans of flavoured crisps, as well as stay-at-home dads, seek an exchange of opinions and advice through social-media groups, not Twitter shouts – and so too do people with a common enemy such as the West, immigrants, or people of a different race, religion or gender.

We therefore expanded our study to look at other forms of shared hatred – not against a political system, but against the West as a whole. It was now early 2014 and IS was starting to develop. Immediately it became clear to us that Facebook was doing a good job of shutting down groups developing extreme pro-IS narratives – a good thing, but bad for our research. However, we did find them on VKontakte – a social-media platform based in Russia that has roughly 350 million users worldwide. Like Facebook, VKontakte has a group tool that enables people with common interests to aggregate together online. However, unlike Facebook, VKontakte is less able to find and quickly shut down extremist and violent groups, making it the “go-to” place for many who wish to share such opinions. Indeed, the platform appears to have been a crucial tool for IS recruitment, particularly among university students, and we found a near-identical algebraic escalation to the Brazilian Spring in the pattern of pro-IS groups created prior to IS’s sudden and unexpected attack on Kobane, Syria, in September 2014.
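To give a feel for how an algebraic escalation could, in principle, be turned into an estimate of when the onset will occur, here is a minimal Python sketch. It is not the analysis from our papers: it assumes the group-creation rate behaves as rate ∝ (T_c − t)^(−β) with a known exponent β, in which case rate^(−1/β) falls on a straight line in time whose zero crossing is T_c. The function name `estimate_onset` and the synthetic data are hypothetical and purely illustrative.

```python
def estimate_onset(times, rates, beta=1.0):
    """Estimate the divergence time T_c, assuming rate ~ (T_c - t)**(-beta).

    Under that assumption rate**(-1/beta) is linear in t, so an ordinary
    least-squares line is fitted and its zero crossing is returned.
    """
    ys = [r ** (-1.0 / beta) for r in rates]
    n = len(times)
    mean_t = sum(times) / n
    mean_y = sum(ys) / n
    sxx = sum((t - mean_t) ** 2 for t in times)
    sxy = sum((t - mean_t) * (y - mean_y) for t, y in zip(times, ys))
    slope = sxy / sxx
    intercept = mean_y - slope * mean_t
    return -intercept / slope  # time at which the fitted line reaches zero


# Synthetic, noise-free example (made-up numbers, not real group data):
# rates are generated from the assumed law with T_c = 100 and beta = 1.
TRUE_ONSET = 100.0
times = [10.0 * k for k in range(1, 9)]          # observation days 10 ... 80
rates = [5.0 / (TRUE_ONSET - t) for t in times]  # new groups per day

estimated_onset = estimate_onset(times, rates, beta=1.0)
print(f"estimated onset day: {estimated_onset:.1f}")
```

With real, noisy counts the exponent would itself have to be fitted and the uncertainty on the estimate would matter a great deal; the sketch only illustrates why a divergence in time carries information about the timing of the onset.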

People, not molecules

So, job done? No. This was still a systems-level theory – like thermodynamics – and did not explicitly include the fact that humans, unlike water molecules, are all different. The implicit physics assumption of identical particles therefore rules out applying existing many-body theories directly to collective human behaviour.

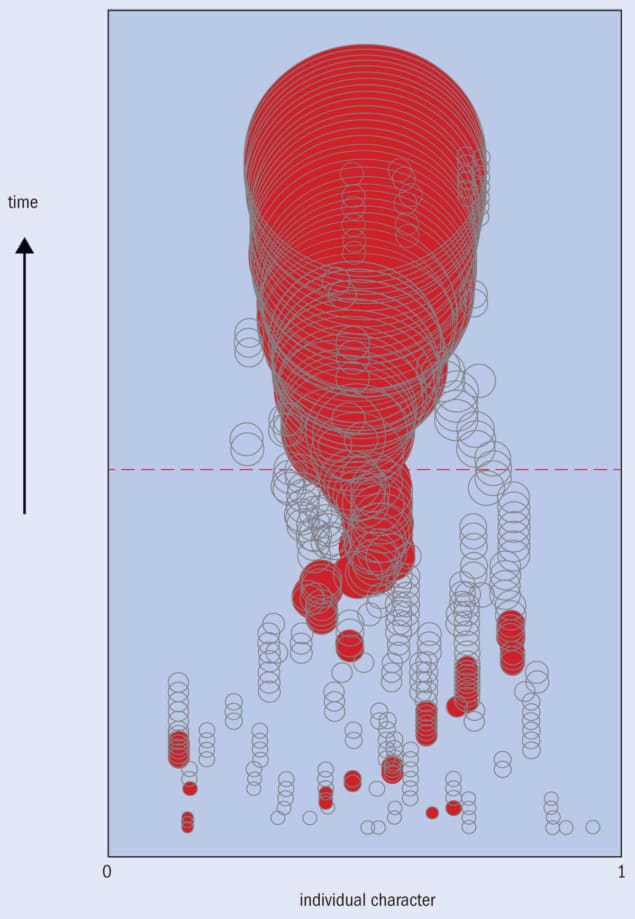

To get a “many-people” theory, we would have to do something that no many-body physics theory had ever done. We could not assume humans are like, say, unconscious, interchangeable atoms but had to include the heterogeneity of living, thinking people. Our hypothesis was that we could take a “mesoscopic” perspective where we sacrifice specific details of each individual in order to capture the overall diversity of the population. Inspired by how wildlife diversity is used to shed light on an ecosystem’s development, we hoped that incorporating a “cartoon” representation – just the basic skeleton of the system – of human diversity might be similarly sufficient. We therefore allowed each human to have a certain “character” typified by a single number between 0 and 1. Though this sounds like a very restricted description for a human being, it turns out it matters little if this character is more complicated – like a multidimensional vector – since the key lies in allowing the individuals to be distributed fairly evenly across the character space between 0 and 1 (i.e. the population is diverse).

Making this simplification then allowed us to describe mathematically how the different characters manage to “gel” into groups. For this, we took inspiration from gelation theory, which is well established for identical particles and has been used to describe aggregation in a wide variety of physical systems, such as milk curdling when proteins form inter-molecular bonds. As expected, however, it fails to describe the online pro-IS group dynamics because it assumes that all particles are identical. But as shown in our 2018 paper (Phys. Rev. Lett. 121 048301), our generalized gelation equations “with character” explain not only the timing of the onset of different groups forming, but also their wide range of growth patterns (figure 3). And when we added in the fact that groups that develop strong narratives in support of terrorism and extremism get shut down by social-media moderators, we obtained an almost perfect fit for the evolution of pro-IS groups online.
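The flavour of gelation “with character” can be conveyed by a toy simulation. The sketch below is not the set of generalized gelation equations from the paper; it is a simplified stand-in built on stated assumptions. Each individual gets a character between 0 and 1, two individuals are picked at random at each step (so larger clusters are picked more often, as in standard gelation), and their clusters merge with a probability taken here to be 1 − |x_i − x_j|. That merging rule, the function name `simulate_gelation` and all parameter values are hypothetical choices made for illustration.

```python
import random


def simulate_gelation(characters, steps, seed=0):
    """Toy aggregation of individuals with heterogeneous 'character'.

    Returns (largest_history, cluster_sizes): the size of the largest
    cluster after each step, and the final list of cluster sizes.
    """
    rng = random.Random(seed)
    n = len(characters)
    parent = list(range(n))   # union-find forest: one tree per cluster
    size = [1] * n            # cluster size, valid at each tree root

    def find(i):
        # Follow parent links to the root, compressing the path as we go.
        while parent[i] != i:
            parent[i] = parent[parent[i]]
            i = parent[i]
        return i

    biggest = 1
    largest_history = []
    for _ in range(steps):
        i, j = rng.randrange(n), rng.randrange(n)
        root_i, root_j = find(i), find(j)
        if root_i != root_j:
            # Assumed rule: similar characters are more likely to "gel".
            similarity = 1.0 - abs(characters[i] - characters[j])
            if rng.random() < similarity:
                if size[root_i] < size[root_j]:
                    root_i, root_j = root_j, root_i
                parent[root_j] = root_i
                size[root_i] += size[root_j]
                biggest = max(biggest, size[root_i])
        largest_history.append(biggest)

    cluster_sizes = [size[r] for r in range(n) if parent[r] == r]
    return largest_history, cluster_sizes


# A diverse population: characters spread evenly between 0 and 1.
population_rng = random.Random(1)
diverse = [population_rng.random() for _ in range(500)]
history, sizes = simulate_gelation(diverse, steps=2000)
print("largest cluster at end:", history[-1], "| clusters remaining:", len(sizes))
```

Setting every character to the same value recovers the identical-particle case, in which every encounter between two different clusters leads to a merger. Comparing the two growth curves gives a hands-on sense of how diversity changes the timing and shape of cluster growth.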

Human brain or social media?

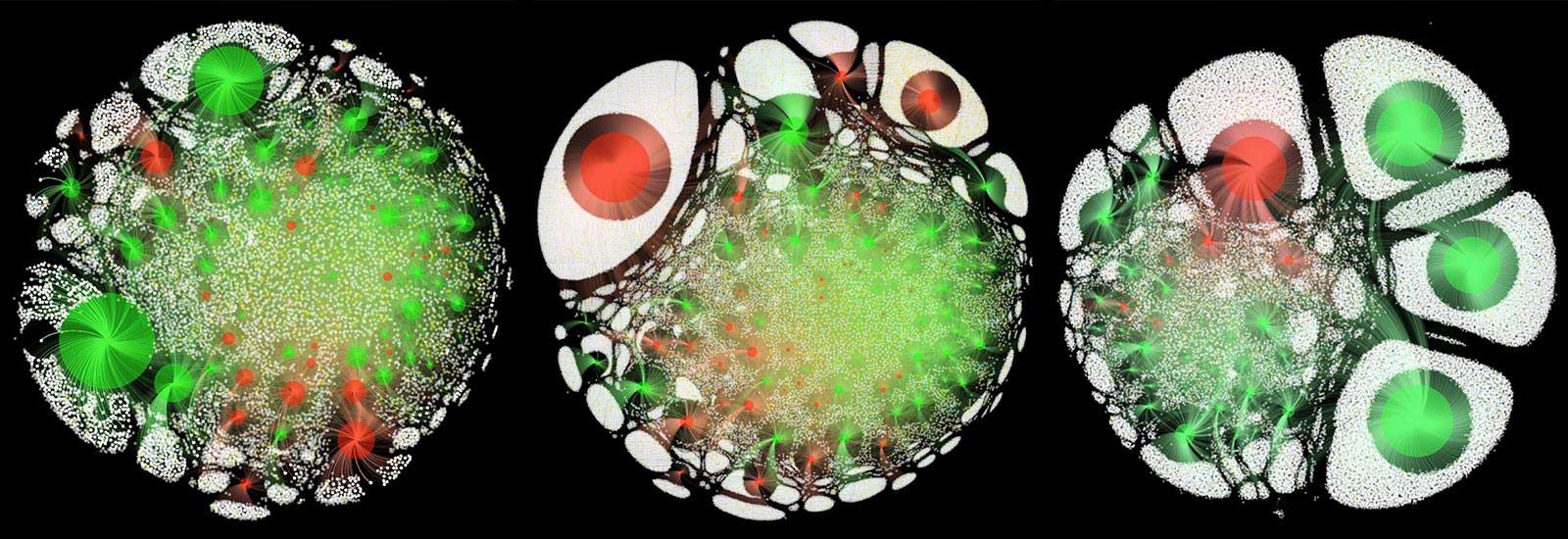

Having understood these moving parts, we could then provide the first ever picture of how a worldwide terror/extremism “organism” evolves in time online. Figure 4 shows snapshots throughout the organism’s lifespan: from its birth in 2014, through a period of rapid growth and evolution, to maturity in mid-2015, and then a gradual decay in activity toward old age and death as the pro-IS groups got shut down more aggressively and their members migrated to encrypted platforms such as Telegram. Not only does each picture look like a brain, but the network behaviour over time is remarkably similar to what is currently known about a brain network during a human’s lifetime.

It turns out that we had stumbled upon a very unlikely but precise connection between online terrorist support and the human brain. In this analogy, each online group acts as a “functional unit” like synapses in the brain, into which “structural units” – users or neurons – connect (figure 1). And just as neurons can engage in multiple synapses, users can be members of more than one online group.

The early stages of the pro-IS “brain” in figure 4 show a large amount of redundancy, with many different groups serving similar functions – just as in a real infant brain. Between infancy and maturity, some of these functional units begin to dominate, as in an early adult brain, and there is an optimal blend between specialization and synchrony in the system. By old age, several giant groups (functional units) dominate, but they share very few common users and hence lack overall synchrony – as in a human brain in old age. Moreover, the functional network (i.e. the network of groups) suffers a loss in small-world behaviour as it heads into old age, while the structural network (i.e. the network of users) shows the opposite trend – exactly as in an ageing human brain.
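The two networks mentioned here can both be built from a single table of who belongs to which group. The following Python sketch, with made-up group and user labels, shows one plausible construction: groups are linked when they share at least one user (the “functional” network), and users are linked when they sit in at least one common group (the “structural” network). The function name `project_networks` and the data are hypothetical, and the construction is an illustrative assumption rather than the exact procedure used in our study.

```python
from itertools import combinations


def project_networks(membership):
    """Build group-group and user-user links from group membership.

    membership maps each group label to the set of its users.
    Returns (group_links, user_links), where group_links maps a pair of
    groups to the number of users they share, and user_links is the set
    of user pairs that share at least one group.
    """
    group_links = {}
    for g1, g2 in combinations(sorted(membership), 2):
        shared = membership[g1] & membership[g2]
        if shared:
            group_links[(g1, g2)] = len(shared)

    user_links = set()
    for users in membership.values():
        for u1, u2 in combinations(sorted(users), 2):
            user_links.add((u1, u2))
    return group_links, user_links


# Made-up example: three groups and five users.
membership = {
    "group_a": {"u1", "u2", "u3"},
    "group_b": {"u3", "u4"},
    "group_c": {"u5"},
}
group_links, user_links = project_networks(membership)
print("group network:", group_links)
print("user network:", sorted(user_links))
```

Tracking how measures such as clustering and path length change in each of these two networks from snapshot to snapshot is what allows the comparison with an ageing brain to be made quantitative.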

Inadvertently, our study of online support for terrorism/extremism has thrown up a new proxy for a human brain, with the advantage that its individual pieces and connections can be measured precisely over time from public Internet data – unlike its biological counterpart. This in turn makes it a potentially unique model for assessing “what-if” scenarios in a real brain, such as cutting out particular pieces, or delaying or stimulating growth of certain parts. Moreover, our latest work on “hate-speech” groups (arXiv:1811.03590) shows similar phenomena, suggesting that the way in which humans conduct clandestine, anti-societal and/or illicit activities online follows a common pattern. This in turn may feed into the universality observed in other human contexts (see “Maths meets myths” by Ralph Kenna and Pádraig MacCarron, Physics World, June 2016).

There is of course much still to do. As you read this, there are undoubtedly individuals online who are developing the intent and capability to carry out further violent attacks. So how might such “many-people” physics theories help detect them before they act? Imagine you meet someone in your university and are interested in knowing the next step in their career. But instead of asking them their current thoughts and getting a potentially vague answer since they themselves may not yet know, you simply ask them what courses they have taken so far. This will then tell you the spectrum of things that they have been exposed to, and hence lets you narrow down what job they are likely to end up in – perhaps better than they themselves could at that stage. In an analogous way, such generalized many-body physics models, in the hands of security specialists, could play a similar role for terrorism, extremism and hate by seeing which individuals have passed through which groups and hence are likely to have the necessary intent and capability.

It is unlikely to be a perfect solution – it is definitely unconventional – but surely it is better than waiting for something horrific to happen before we take any action.